How badly are MENA political scientists really doing?

Two new studies of journal publication trends shed more light on what is and isn't getting published, and why it matters.

How badly are MENA-focused political scientists really doing in the top journals? Is it getting any better? At what cost? Last year, Melani Cammett and Isabel Kendall published a widely read study of Middle East-focused articles in a set of top political science journals. They found that the proportion of MENA-focused articles was low but increasing, driven particularly by the broad interest across the discipline in the Arab uprisings and by the shifting methodological approaches of scholars of the region. One of their key takeaways was that MENA political science was “increasingly integrated in mainstream political science, with articles addressing core disciplinary debates and relying increasingly more on statistical and experimental methods. Yet, these shifts may come at the expense of predominantly qualitative research.”

I offered my thoughts on these trends in my introduction to our edited volume The Political Science of the Middle East. I had a similar but more optimistic reading based on a smaller basket of journals, focusing on trends over time within those journals which tended to publish the type of work that MENA specialists did, and also looking more broadly at publishing in a wider range of journals and platforms. Like Cammett and Kendall, and many others, I also expressed concern about some of those trends, such as the diversion of research into particular countries (for safety or other reasons) and methods (such as experimental methods and analysis of off-the-shelf datasets).

In this context, it’s worth considering two major new contributions to the analysis of these trends: a critique of the Cammett/Kendall article by Andrea Teti and Pamela Abbott published two weeks ago, and an original analysis of a different set of journals by Mark Berlin and Anum Pasha Syed published last fall. Each article in its own way enriches our understanding of what MENA political science is really publishing when it’s publishing in political science journals… and, just as importantly, what it’s not doing.

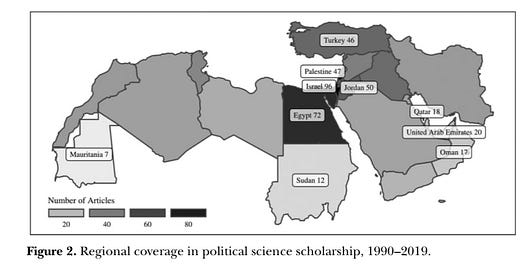

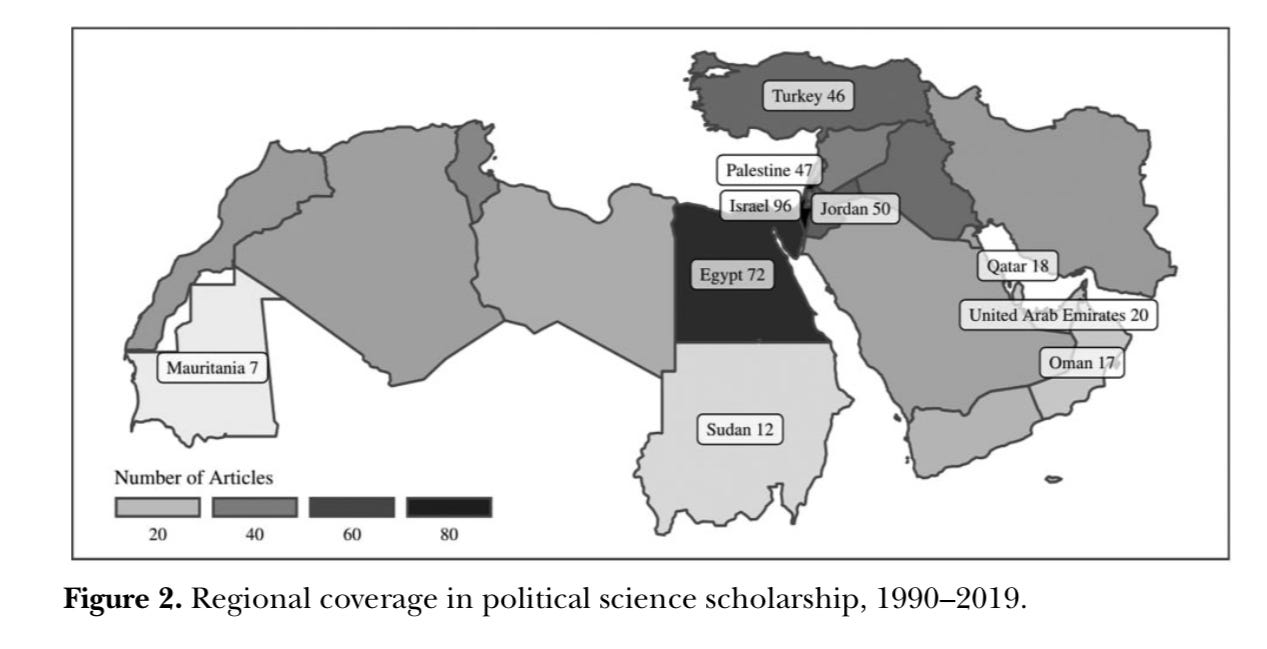

Let’s start with Berlin and Syed. Writing in International Studies Review, they look at nine top disciplinary journals over thirty years and identify 283 articles dealing with the Middle East, compared with the thirteen journals over twenty years examined by Cammett and Kendall.

It’s worth noting that neither dataset includes highly ranked security-focused journals such as Security Studies, International Security, the Journal of Conflict Resolution or the Journal of Peace Research, which tend to publish quite a bit on the Middle East. That omission likely leads both datasets to significantly overstate the marginalization of MENA research. Nor does either include more theoretically eclectic disciplinary IR journals such as the European Journal of International Relations, Review of International Studies, or International Theory, which may lead to overstating the dominance of quantitative methodologies. Unlike Cammett/Kendall, Berlin/Syed exclude Perspectives on Politics, a theoretically eclectic and highly ranked disciplinary journal which publishes quite a few MENA-focused articles. This is not to say that the choice of journals for either dataset is indefensible: both chose highly rated, influential disciplinary journals which any hiring or tenure committee would like to see. But it does seem likely to bias the results somewhat. I mean, am I really meant to be shocked if I’m told that the American Journal of Political Science, the (pre-2020) American Political Science Review, and the Journal of Politics didn’t publish many (or any) non-quantitative articles on the Middle East? Knock me over with a feather. At the same time, as Neil Ketchley has pointed out, it’s not like quantitative work appears frequently in MENA area studies journals - maybe it’s just a rational sorting process?

Like Cammett and Kendall, Berlin and Syed find qualitative methods to be the most frequent approach, but with an increase over the last decade in publications employing quantitative and experimental methods. The share of MENA-focused articles roughly doubled over the course of their study… but that’s from 1.6% in 1990 to 3.1% in 2019, hardly a strong showing. Their often creative decisions about what to look for and code from the articles in their dataset add a whole range of other interesting findings which I encourage you to check out. For instance, they find a striking neglect of the Maghreb and Arabian Peninsula cases, and of the many wars and conflicts not involving Israel or Iraq. They also record remarkably low attention to issues such as race and ethnicity, gender and identity politics, especially compared to the prevalence of such topics in non-disciplinary journals. They also ask whether episodic interest in particular events - the Gulf War, the Arab uprisings - is inflating these numbers, as opposed to better research by MENA political scientists or sustained and comprehensive interest in MENA politics for its own sake. And, in one especially useful if dispiriting exercise, they find that only 41% (!!) of the articles cite at least one source in a Middle Eastern language, with 45% of those being in Arabic and 33% in Hebrew but only 2% in Persian (despite some 30 articles using Iran as a case).

Now, let’s look at the just-published critique by Teti and Abbott, who recode the Cammett/Kendall dataset to argue that their analysis radically overstated the amount of qualitative research in these journals in ways which understate the degree of marginalization of non-quantitative work. They argue that the original coding inappropriately coded multiple articles using single case studies or small-n approaches as “qualitative.” Many of the case studies they re-examine actually use quantitative methods on that case, for instance, or mixed methods with some interviews for local color over a generally non-qualitative approach. Their critique is a bit overstated. Much of the overstatement derives from a somewhat absurdly narrow definition of what counts as qualitative, which begins with “drawing on evidence that cannot be reduced to quantified forms” and then further tightens to exclude articles that “did not specify a method and lacked rigor, transparency, and replicability.” Thus, obviously qualitative ethnographic research such as Sarah Parkinson’s is excluded because “interview data was not available.” Other articles which draw heavily on interviews and local context are dismissed as “descriptive.” Of 77 articles in the Cammett/Kendall dataset coded as single or comparative case studies, only a single article is deemed to meet their standards for qualitative research. I don’t know. That magnitude of disagreement suggests that the problem lies more with their coding choices than with the actual research being done.

That said, my sense is that they capture something important in the bigger picture: a lot of “qualitative” articles pass muster in these journals by contorting themselves to adopt methods and standards which align with only certain types of research, a practice which tends to exclude a lot of otherwise high quality forms of knowledge production. And the seal of approval conveyed by inclusion in these “top” journals validates work which other scholars who know the cases and issues well might not find very compelling.

Beyond the interrogations of qualitative purity, Teti and Abbott put forward the really good and important question of “why methodologically sophisticated scholarship that exists outside of Political Science/International Relations top journals has not been published there.” Here they note the “paradigmatic poverty” of those journals and the extreme marginalization of topics and methods which stray far from the disciplinary core. I think they are on to something here. There is an enormous amount of interesting, richly empirical and theoretically innovative work about the Middle East being published in dozens of journals which seems to be excluded only (or at least primarily) from the core disciplinary journals. The work in those journals does seem to be far more diverse and, to be honest, more interesting than what’s published in many of the top political science journals.

That, of course, immediately raises questions of what counts as “interesting”. So, for instance, one might argue that the political science discipline’s fetish for causal inference in recent years has tended to rule out a wide range of research which otherwise addressed big questions with robust data; though, of course, those who prioritize causal inference concerns would claim that addressing them does make the research more interesting. Or one might point to the boomlet in calls for data transparency, which may have raised the bar for publication on authoritarian countries such as those in the Middle East (a possibility not precluded by the admirable success of some great scholars in clearing that bar). In one sense, even if one doesn’t personally find experimental work interesting, the trends could still be seen as a success story in terms of the evolution of the field, as a cohort of scholars found publishing success by actively seeking to meet disciplinary trends and the demands of those journals by shifting into the type of research that could meet their expectations.

These are interesting and important questions for our discipline, and all three of the studies reviewed here make significant contributions to our understanding of what’s really been happening in it. I think that most of us in the field recognize these trends, and appreciate having the data to back them up. Problems that are recognized can be addressed. The POMEPS virtual research workshop (and, I have no doubt, other such initiatives) has had a good track record of helping junior scholars develop their articles for successful submission to top journals. And it’s noteworthy (and praiseworthy) that when the editors of Perspectives on Politics during the 2010s and the new American Political Science Review editorial team of the last few years actively recruited MENA scholars to submit their work, a significant number of them succeeded in getting published in those top journals.

And with that, let the disciplinary navel-gazing and ritual self-flagellation commence!